AlexNet Code Demonstration

"""
This program was written by 賴俊霖.
"""

import tensorflow as tf

from tensorflow import keras

from keras.utils import np_utils

import numpy as np

from tensorflow.keras import datasets,layers,models,losses

from keras.utils import to_categorical

from tensorflow.keras.utils import to_categorical

import numpy as np

import matplotlib.pyplot as plt

import gzip

import os

import matplotlib

# 下載中文支援字型。後面畫圖需要

zhfont = matplotlib.font_manager.FontProperties(fname='./fashion_mnist_data/SimHei-windows.ttf')

# 解析解壓得到四個訓練的資料

def read_data():

files = [

'train-labels-idx1-ubyte.gz', 'train-images-idx3-ubyte.gz',

't10k-labels-idx1-ubyte.gz', 't10k-images-idx3-ubyte.gz'

]

# 在當前的目錄下建立資料夾,裡面放入上面的四個壓縮檔案

current = './fashion_mnist_data'

paths = []

for i in range(len(files)):

paths.append('./fashion_mnist_data/'+ files[i])

with gzip.open(paths[0], 'rb') as lbpath:

y_train = np.frombuffer(lbpath.read(), np.uint8, offset=8)

with gzip.open(paths[1], 'rb') as imgpath:

x_train = np.frombuffer(

imgpath.read(), np.uint8, offset=16).reshape(len(y_train), 28, 28)

with gzip.open(paths[2], 'rb') as lbpath:

y_test = np.frombuffer(lbpath.read(), np.uint8, offset=8)

with gzip.open(paths[3], 'rb') as imgpath:

x_test = np.frombuffer(

imgpath.read(), np.uint8, offset=16).reshape(len(y_test), 28, 28)

return (x_train, y_train), (x_test, y_test)

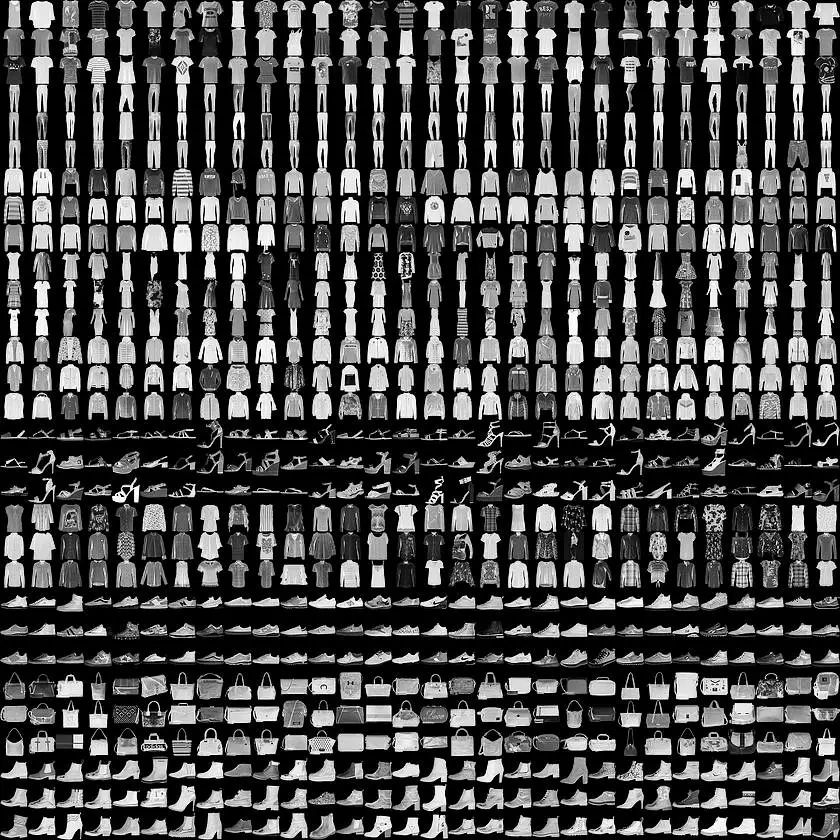

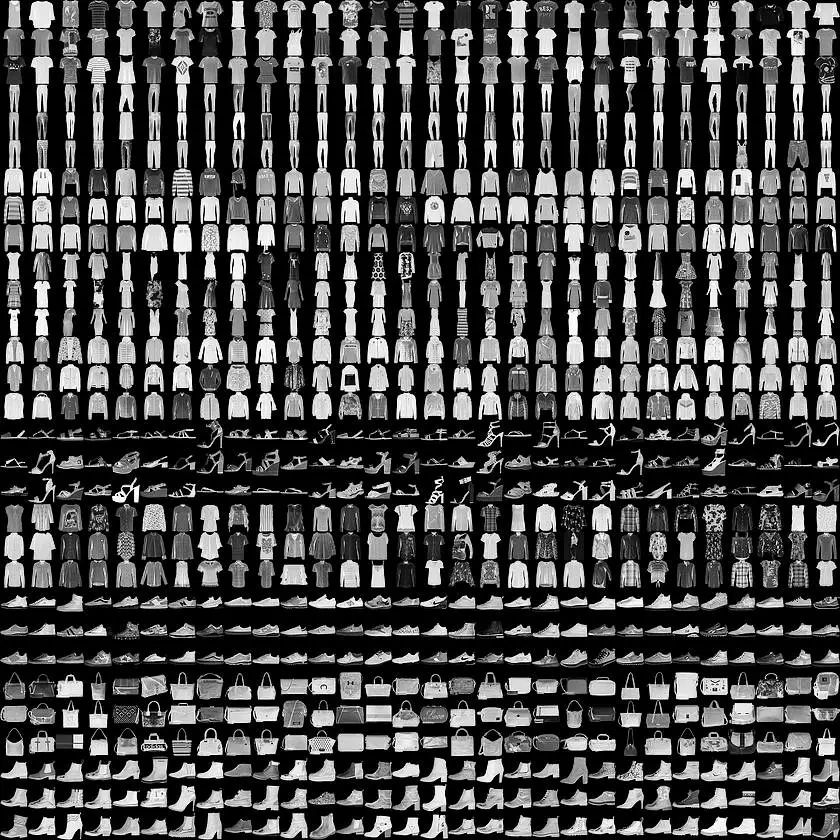

# 分別得到訓練資料集和測試資料集

(train_images, train_labels), (test_images, test_labels) = read_data()

train_images = train_images.reshape(train_images.shape[0],28,28,1)

test_images = test_images.reshape(test_images.shape[0],28,28,1)

train_labels = np_utils.to_categorical(train_labels)

test_labels = np_utils.to_categorical(test_labels)

class_names = ['短袖圓領T恤', '褲子', '套衫', '連衣裙', '外套',

'涼鞋', '襯衫', '運動鞋','包', '短靴']

# 訓練影象縮放255,在0 和 1 的範圍

train_images = train_images / 255.0

# 測試影象縮放

test_images = test_images / 255.0
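The `offset=8` and `offset=16` values in `read_data` skip the headers of the IDX format used by the Fashion-MNIST files. Following the published MNIST format description, a label file (idx1) begins with a 4-byte magic number (2049) and a 4-byte item count, and an image file (idx3) begins with a magic number (2051), an image count, a row count and a column count, all 32-bit big-endian integers. The sketch below uses only the Python standard library to build a tiny in-memory IDX pair and parse those headers; `make_idx_labels`, `make_idx_images`, `parse_labels` and `parse_images` are hypothetical helpers for illustration, not part of the original program.

```python
import gzip
import struct

LABEL_MAGIC = 2049  # 0x00000801: idx1, unsigned bytes, 1 dimension
IMAGE_MAGIC = 2051  # 0x00000803: idx3, unsigned bytes, 3 dimensions


def make_idx_labels(labels):
    """Hypothetical helper: encode labels as idx1-ubyte bytes."""
    header = struct.pack('>II', LABEL_MAGIC, len(labels))  # 8-byte header
    return header + bytes(labels)


def make_idx_images(images, rows, cols):
    """Hypothetical helper: encode flat pixel lists as idx3-ubyte bytes."""
    header = struct.pack('>IIII', IMAGE_MAGIC, len(images), rows, cols)  # 16 bytes
    return header + b''.join(bytes(img) for img in images)


def parse_labels(data):
    magic, count = struct.unpack('>II', data[:8])
    if magic != LABEL_MAGIC:
        raise ValueError('not an idx1 label file')
    return list(data[8:8 + count])  # skip the header, like offset=8


def parse_images(data):
    magic, count, rows, cols = struct.unpack('>IIII', data[:16])
    if magic != IMAGE_MAGIC:
        raise ValueError('not an idx3 image file')
    body = data[16:]  # skip the header, like offset=16
    size = rows * cols
    images = [list(body[i * size:(i + 1) * size]) for i in range(count)]
    return images, rows, cols


# Round-trip two tiny 2x2 images through gzip, as the real .gz files are stored
raw_labels = gzip.compress(make_idx_labels([3, 7]))
raw_images = gzip.compress(make_idx_images([[0, 255, 128, 64], [1, 2, 3, 4]], 2, 2))

labels = parse_labels(gzip.decompress(raw_labels))
images, rows, cols = parse_images(gzip.decompress(raw_images))
print(labels)      # [3, 7]
print(rows, cols)  # 2 2
print(images[0])   # [0, 255, 128, 64]
```

Checking the magic number is a cheap way to catch a swapped or corrupted file before the reshape to (N, 28, 28) fails with a less obvious error.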

# Import the model-building classes from tensorflow.keras only, so that
# standalone-Keras and tf.keras objects are never mixed in one model
from tensorflow.keras.layers import Activation, BatchNormalization, Conv2D
from tensorflow.keras.layers import Dense, Dropout, Flatten, MaxPooling2D
from tensorflow.keras.models import Sequential

AlexNet = Sequential()

#Layer 1

AlexNet.add(Conv2D(filters=96, input_shape=(28,28,1), kernel_size=(11,11), strides=(4,4), padding='same'))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

AlexNet.add(MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'))

#Layer 2

AlexNet.add(Conv2D(filters=256, kernel_size=(5, 5), strides=(1,1), padding='same'))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

AlexNet.add(MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'))

#Layer 3

AlexNet.add(Conv2D(filters=384, kernel_size=(3,3), strides=(1,1), padding='same'))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

#Layer 4

AlexNet.add(Conv2D(filters=384, kernel_size=(3,3), strides=(1,1), padding='same'))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

#Layer 5

AlexNet.add(Conv2D(filters=256, kernel_size=(3,3), strides=(1,1), padding='same'))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

AlexNet.add(MaxPooling2D(pool_size=(2,2), strides=(2,2), padding='same'))

#Flatten the feature maps into a vector for the fully connected layers

AlexNet.add(Flatten())

#Fully Connected Layer 1

AlexNet.add(Dense(1000))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

AlexNet.add(Dropout(0.4))

#Fully Connected Layer 2

AlexNet.add(Dense(1000))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

AlexNet.add(Dropout(0.4))

#Fully Connected Layer 3

AlexNet.add(Dense(500))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('relu'))

AlexNet.add(Dropout(0.4))


#Output Layer

AlexNet.add(Dense(10))

AlexNet.add(BatchNormalization())

AlexNet.add(Activation('softmax'))

AlexNet.summary()

#Optional alternative to SGD: optimizer=Adam(learning_rate=0.001), with Adam imported from tensorflow.keras.optimizers

AlexNet.compile(loss='categorical_crossentropy', optimizer='sgd', metrics=['accuracy'])
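With `loss='categorical_crossentropy'`, each one-hot label from `to_categorical` is compared with the softmax output of the last layer. For one sample the loss is the negative sum over classes of y_k times log(p_k); with a one-hot label only the true class contributes, so the loss is -log of the probability assigned to the true class. The pure-Python sketch below illustrates that formula on made-up probabilities (not model output) and averages over a batch; Keras also clips probabilities away from 0 and 1 before taking the log, which this sketch omits.

```python
import math


def softmax(logits):
    # Subtract the max before exponentiating for numerical stability
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_crossentropy(y_true, y_pred):
    # -sum_k y_k * log(p_k); zero entries of y_true are skipped
    return -sum(y * math.log(p) for y, p in zip(y_true, y_pred) if y > 0)


def batch_loss(batch_true, batch_pred):
    # Mean of the per-sample losses over the batch
    losses = [categorical_crossentropy(t, p) for t, p in zip(batch_true, batch_pred)]
    return sum(losses) / len(losses)


# Illustrative 3-class example (the model above has 10 classes)
y_true = [1.0, 0.0, 0.0]
p = [0.7, 0.2, 0.1]
print(round(categorical_crossentropy(y_true, p), 4))  # 0.3567
print(softmax([2.0, 1.0, 0.1]))  # probabilities that sum to 1
```

The loss shrinks toward 0 as the probability of the true class approaches 1, which is what SGD pushes the network toward during `fit`.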

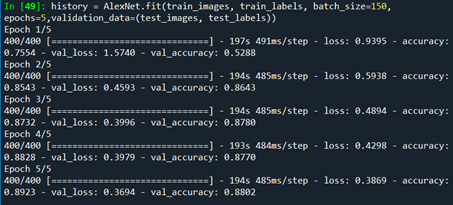

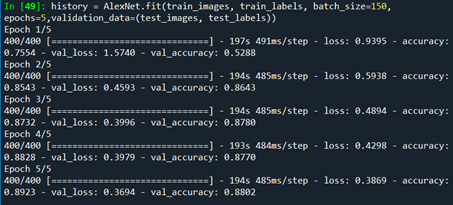
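With `padding='same'`, Keras convolution and pooling layers produce an output of size ceil(n / stride) along each spatial axis, whatever the kernel size. Tracing that rule through the five convolutional layers and three pooling layers of the model code above shows how quickly the 11x11, stride-4 first layer shrinks a 28x28 Fashion-MNIST image: the feature maps reach 1x1 before `Flatten`, so the first Dense layer receives 256 values. The `LAYERS` list in this sketch is transcribed by hand from the model code; it is not read from Keras.

```python
import math

# (layer name, stride, output channels); None means channels unchanged (pooling)
LAYERS = [
    ('conv1 11x11', 4, 96),
    ('pool1', 2, None),
    ('conv2 5x5', 1, 256),
    ('pool2', 2, None),
    ('conv3 3x3', 1, 384),
    ('conv4 3x3', 1, 384),
    ('conv5 3x3', 1, 256),
    ('pool3', 2, None),
]


def same_padding_size(n, stride):
    # Output length of a 'same'-padded conv or pooling layer along one axis
    return math.ceil(n / stride)


def trace_shapes(height, width, channels):
    shapes = []
    for name, stride, out_channels in LAYERS:
        height = same_padding_size(height, stride)
        width = same_padding_size(width, stride)
        if out_channels is not None:
            channels = out_channels
        shapes.append((name, (height, width, channels)))
    return shapes


shapes = trace_shapes(28, 28, 1)
for name, shape in shapes:
    print(f'{name:12s} -> {shape}')

h, w, c = shapes[-1][1]
print('Flatten size:', h * w * c)  # Flatten size: 256
```

The same trace explains why the pooling layers use `padding='same'`: with 'valid' padding a 2x2 pool on the 1x1 or odd-sized maps would shrink them differently or fail.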

history = AlexNet.fit(train_images, train_labels, batch_size=150, epochs=5, validation_data=(test_images, test_labels))
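`batch_size=150` and `epochs=5` fix how many gradient updates `fit` performs. Assuming the standard Fashion-MNIST split of 60,000 training and 10,000 test images, a quick calculation gives the number of steps; the constant names below are illustrative. Validation batches default to the training `batch_size` in Keras when `validation_batch_size` is not given.

```python
import math

TRAIN_SIZE = 60_000  # Fashion-MNIST training images (assumed standard split)
TEST_SIZE = 10_000   # Fashion-MNIST test images, passed here as validation data
BATCH_SIZE = 150
EPOCHS = 5


def steps_per_epoch(num_samples, batch_size):
    # The last batch may be smaller, hence the ceiling
    return math.ceil(num_samples / batch_size)


train_steps = steps_per_epoch(TRAIN_SIZE, BATCH_SIZE)
print(train_steps)                                # 400
print(train_steps * EPOCHS)                       # 2000
print(steps_per_epoch(TEST_SIZE, BATCH_SIZE))     # 67
```

So the whole run performs 2,000 SGD updates, and the validation accuracy reported after each epoch is computed on the full test set.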

AlexNet Results

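After 5 epochs of training, AlexNet reached an accuracy of 0.89 on the training set and 0.88 on the test set (passed to fit as validation data), a very good result.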
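The 0.89 and 0.88 figures are the `accuracy` metric requested in `compile`. With one-hot labels and `categorical_crossentropy`, Keras treats `'accuracy'` as categorical accuracy: a sample counts as correct when the index of the largest softmax probability matches the index of the 1 in its label. The pure-Python sketch below reproduces that computation on made-up predictions (not real model output) and maps a predicted index back to a class name; `CLASS_NAMES_EN` is a hypothetical English counterpart of `class_names`, in the same order.

```python
CLASS_NAMES_EN = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
                  'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']


def argmax(values):
    # Index of the largest value (the first one on ties)
    return max(range(len(values)), key=lambda i: values[i])


def categorical_accuracy(y_true, y_pred):
    # Fraction of samples whose predicted class equals the one-hot label's class
    correct = sum(argmax(t) == argmax(p) for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def one_hot(index, num_classes=10):
    return [1.0 if i == index else 0.0 for i in range(num_classes)]


# Made-up softmax outputs for three samples
y_pred = [
    [0.05, 0.01, 0.02, 0.02, 0.01, 0.01, 0.85, 0.01, 0.01, 0.01],  # predicts 6
    [0.01, 0.90, 0.01, 0.01, 0.01, 0.01, 0.01, 0.01, 0.02, 0.01],  # predicts 1
    [0.01, 0.01, 0.01, 0.01, 0.01, 0.60, 0.01, 0.30, 0.02, 0.02],  # predicts 5
]
y_true = [one_hot(0), one_hot(1), one_hot(5)]

print(round(categorical_accuracy(y_true, y_pred), 4))  # 0.6667
print(CLASS_NAMES_EN[argmax(y_pred[0])])               # Shirt
```

The first sample shows a typical Fashion-MNIST confusion: a T-shirt/top predicted as Shirt counts as wrong even though the classes look alike.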